KDE Optimization Primer

In statistics, the univariate kernel density estimation (KDE) is a non-parametric way to estimate the probability density function of a random variable X, a fundamental data smoothing problem where inferences about the population are made, based on a finite data sample.

Why use KDE?

Using a kernel instead of discrete probabilities, we promote the continuity nature of the underlying random variable.

How do we calculate KDE?

In a nutshell, we place a weight (aka a kernel) function at each observation, and the probability density function is expressed as follows:

Where:

- $k(.)$ is the kernel function.

- $h$ is the bandwidth or the kernel window width.

- $N$ is the number of observations.

For the $\hat{f}\left(x\right)$ to be a probability function, the kernel function must be a non-negative, symmetric, integrable function made of real values.

Hence:

To proceed with KDE, you’ll need to decide on two key parameters: Kernel function and bandwidth.

How to Compute Optimal Bandwidth?

For a given data sample and a kernel function, we need a consistent data-driven method to compute the optimal bandwidth value.

How do we determine the optimality of one kernel (and bandwidth) versus another? We need first to quantify the accuracy of the kernel estimators.

For a given $x$, define the mean squared error of a kernel density estimator as follows:

Where:

$f(x)$ is the true (unknown) underlying probability function.

Let’s expand the quadratic form:

Add and subtract the $2E\left(\hat{f}\left(x\right)\right)^2$ term, and re-arrange the terms:

Notes:

- The $(\mathbb{E}(\hat{f}(x))-f(x))$ term compares the expected density value to the actual one. This term is referred to as the “bias.”

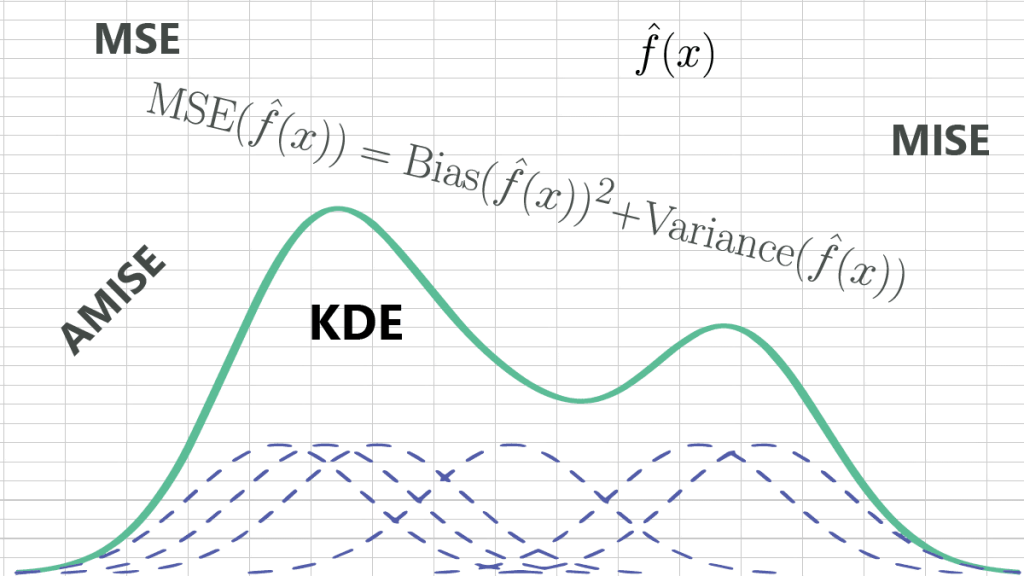

- The second term is the variance of the $\hat{f}(x)$ itself. $$\text{MSE}(\hat{f}(x))=\text{Bias}{{(\hat{f}(x))}^{2}}+\text{Variance}(\hat{f}(x))$$

For all values of $x\in (-\infty ,\infty )$, we define the mean integrated squared error (MISE):

Let’s apply the definition above to KDE:

- $\mathbb{E}(\hat{f}(x))$: $$\mathbb{E}(\hat{f}(x))=\frac{1}{n}\sum\limits_{i=1}^{n}{\mathbb{E}}(\frac{1}{h}K(\frac{x-{{X}_{i}}}{h}))$$ Assuming $\{{{x}_{i}}\}$ are independent and identically distributed ($i.i.d$): $$\mathbb{E}(\hat{f}(x))=\frac{1}{n}\sum\limits_{i=1}^{n}{\frac{1}{h}}\int_{-\infty }^{\infty }{(K(}\frac{x-t}{h}))f(t)dt=\frac{1}{h}\int_{-\infty }^{\infty }{K}(\frac{x-t}{h})f(t)dt$$ $$\mathbb{E}(\hat{f}(x))=\frac{1}{h}\int_{-\infty }^{\infty }{K}(\frac{x-t}{h})f(t)dt=\frac{1}{h}\int_{-\infty }^{\infty }{h}K(z)f(x-hz).dz$$ Using Taylor’s expansion: $$f(x-hz)=f(x)-hz{{f}^{(1)}}(x)+\frac{{{(hz)}^{2}}}{2}{{f}^{(2)}}(x)+O({{h}^{2}})$$ $$\mathbb{E}\left[ \hat{f}(x) \right]=\int_{-\infty }^{\infty }{K}(z)(f(x)-hz{{f}^{(1)}}(x)+\frac{{{(hz)}^{2}}}{2}{{f}^{(2)}}(x)).dz+O({{h}^{2}})$$ $$\mathbb{E}\left[ \hat{f}(x) \right]=f(x)+\text{ }\frac{{{h}^{2}}{{f}^{(2)}}(x)}{2}{{\mu }_{2}}(K)+O({{h}^{2}})$$ Where ${{\mu }_{2}}(K)$ is the second moment of the kernel function (i.e., ${{\mu }_{2}}(K)=\int_{-\infty }^{\infty }{{{z}^{2}}}K(z)dz$). Thus, the $\text{Bias}(\hat{f}(x))$ of the KDE is expressed as follows: $$\text{Bias}(\hat{f}(x))=\text{ }\frac{{{h}^{2}}{{f}^{(2)}}(x)}{2}{{\mu }_{2}}(K)+O({{h}^{2}})$$

- $\text{Var}(\hat{f}(x))$: Now, let’s compute the density estimate variance: $$\text{Var}(\hat{f}(x))=\text{Var}\left( \frac{1}{nh}\sum\limits_{i=1}^{n}{K}(\frac{x-{{X}_{i}}}{h}) \right)$$ Assuming $\{{{x}_{i}}\}$ are independent and identically distributed ($i.i.d$), the covariance terms are all zeros. $${\rm{Var}}(\hat f(x)) = {1 \over {{{(nh)}^2}}}\sum\limits_{i = 1}^n {{\rm{Var}}} \left( {K({{x – {X_i}} \over h})} \right)$$ But: $${\text{Var}}\left( {K(\frac{{x – t}}{h})} \right) = {\Bbb E}\left[ {K{{(\frac{{x – t}}{h})}^2}} \right] – {\Bbb E}{\left[ {K(\frac{{x – t}}{h})} \right]^2}$$ $${\rm{Var}}\left( {K({{x – t} \over h})} \right) = \int_{ – \infty }^\infty K {({{x – t} \over h})^2}f(t)dt – {\left[ {\int K ({{x – t} \over h}f(t)dt)} \right]^2}$$ Putting it back into ${\rm{Var}}(\hat f(x))$ equation above: $${\rm{Var}}(\hat f(x)) = {1 \over {{{(nh)}^2}}}\sum\limits_{i = 1}^n {\int_{ – \infty }^\infty K } {({{x – t} \over h})^2}f(t)dt – {\left[ {\int K ({{x – t} \over h})f(t)dt)} \right]^2}$$ $${\rm{Var}}(\hat f(x)) = {1 \over {n{h^2}}}\left( {\int_{ – \infty }^\infty K {{({{x – t} \over h})}^2}f(t)dt – {{\left[ {\int K ({{x – t} \over h})f(t)dt)} \right]}^2}} \right)$$ let’s define $z = {{x – t} \over h}$: $${\rm{Var}}(\hat f(x)) = {1 \over {nh}}\int K {(z)^2}f(x – zh)dz – {1 \over n}{\left[ {\int K (z)f(x – zh)dz)} \right]^2}$$ Using Taylor’s expansion: $${\rm{Var}}(\hat f(x)) = {1 \over {nh}}\int K {(z)^2}(f(x) – hz{f^{(1)}}(x) + {{{{(hz)}^2}} \over 2}{f^{(2)}}(x) + O({h^2}))dz – $$ $${1 \over n}{\left[ {\int K (z)(f(x) – hz{f^{(1)}}(x) + {{{{(hz)}^2}} \over 2}{f^{(2)}}(x) + O({h^2}))dz)} \right]^2}$$ $${\text{Var}}(\hat f(x)) = \frac{{f(x)R(K)}}{{nh}} + \frac{h}{{2n}}{f^{(2)}}(x)\int {{z^2}} K{(z)^2}dz – \frac{{{\Bbb E}{{[\hat f(x)]}^2}}}{n}$$ Where: $$R(K) = \int K {(z)^2}dz$$ $${\text{Var}}(\hat f(x)) = \frac{{f(x)R(K)}}{{nh}} + \frac{{{\Bbb E}{{[\hat f(x)]}^2}}}{n}$$

- Putting it together, the mean squared error (MSE) of the KDE is computed as follows: $${\rm{MSE}}(\hat f(x)) = {\rm{Bias}}{(\hat f(x))^2} + {\rm{Variance}}(\hat f(x))$$ $${\text{MSE}}(\hat f(x)) = {\text{ }}\frac{{{h^4}{f^{(2)}}{{(x)}^2}\mu _2^2(K)}}{4} + \frac{{f(x)R(K)}}{{nh}} + \frac{{{\Bbb E}{{[\hat f(x)]}^2}}}{n} + O({h^2})$$ $${\rm{MSE}}(\hat f(x)) \approx {\rm{ }}{{{h^4}{f^{(2)}}{{(x)}^2}\mu _2^2(K)} \over 4} + {{f(x)R(K)} \over {nh}}$$

- Integrating over $x \in ( – \infty ,\infty )$ and considering only the dominating part of the MISE (asymptotic): $${\rm{AMISE}}(\hat f(x)) \approx {\rm{ }}{{{h^4}\mu _2^2(K)R({f^{(2)}})} \over 4} + {{R(K)} \over {nh}}$$

Please note that AMISE still has an unknown constant term $R({f^{(2)}})$, which is dependent on the unknown underlying true probability distribution function:

For now, let’s assume that we can somehow estimate the unknown constant term $R({f^{(2)}})$.

Examining the AMISE formula, we can see that as the bandwidth value (i.e., $h$) increases, the first term increases, but the second term decreases. There is an optimal bandwidth value $h$ that minimizes the AMISE value.

Take the 1st derivative of the AMISE and make it equal to zero:

Rearrange the terms:

Substitute the ${h_{opt}}$ back into the AMISE equation, the minimum AMISE value is expressed as follows:

Which Kernel Should I Use?

There are many kernel functions that satisfy the conditions described above (e.g., non-negative, symmetric, real-values, and integrable) to choose from. For example: Uniform, triangular, Gaussian, biweight, triweight, Epanechnikov, cosine, etc.

In the ${\rm{AMISE}}({h_{{\rm{opt}}}})$ formula, we notice the term $R{(K)^4} \times \mu _2^2(K)$, which is solely dependent on the selected kernel function $K$. How do we quantify the effect of the kernel function on the optimal KDE?

To answer this question, let’s first define a new term: kernel efficiency. Using the Epanechnikov kernel as a baseline, the kernel $K$ efficiency is defined as follows:

Substituting the optimal AMISE formula:

The higher the efficiency, the lower the optimal AMISE value.

Let’s examine the efficiency of a few kernel functions:

| Kernel | ${\mu _2} = \int {{x^2}} K(x)dx$ | $R(K) = \int {{K^2}(x)dx} $ | ${\rm{Eff}}(K)$ |

|---|---|---|---|

| Epanechnikov | ${1 \over 5}$ | ${3 \over 5}$ | 100% |

| Uniform | ${1 \over 3}$ | ${1 \over 2}$ | 92.95% |

| Triangular | ${1 \over 6}$ | ${2 \over 3}$ | 98.6% |

| Quartic (Biweight) | ${1 \over 7}$ | ${5 \over 7}$ | 99.4% |

| Triweight | ${1 \over 9}$ | ${{350} \over {429}}$ | 98.7% |

| Gaussian | 1 | ${1 \over {2\sqrt \pi }}$ | 95.1% |

| Cosine | $1 - {8 \over {{\pi ^2}}}$ | ${{{\pi ^2}} \over {16}}$ | 99.9% |

What do those values mean? Although using the Epanechnikov kernel can give the lowest optimal AMISE, the difference of the AMISE using other kernels is relatively small.

Silverman (Rule-of-Thumb)

In the optimal bandwidth and the AMISE formula, we were left with one unknown term: $R({f^{(2)}})$, which is dependent on the unknown true probability distribution – the same function that we are trying to estimate with the KDE.

How do we go about estimating this $R({f^{(2)}})$? Let’s assume that the underlying distribution is Gaussian (i.e., $x \sim N(\mu ,{\sigma ^2})$). This is referred to as the Silverman rule-of-thumb.

The ${f^{(2)}}(x)$ is expressed as follows:

The $R({f^{(2)}}(x))$ is calculated as follows:

Substituting into the optimal bandwidth formula:

The only term we need to estimate is the $\sigma $. To avoid the effect of potential outliers, we estimate $\sigma $ as the minimum of the sample standard deviation $s$ and the standardized interquartile range (${\sigma _{{\rm{IQR}}}}$).

Where:

- ${\sigma _{{\rm{IQR}}}}$ is defined as follows: ${\sigma _{{\rm{IQR}}}} = {{Q3 – Q1} \over {{\Phi ^{ – 1}}(0.75) – {\Phi ^{ – 1}}(0.25)}} = {{Q3 – Q1} \over {1.34}}$

- ${\Phi ^{ – 1}}(.)$ is the inverse normal cumulative distribution function.

Assuming we use a Gaussian kernel function ($R(K) = {1 \over {2\sqrt \pi }}$ and ${\mu _2}(K) = 1$), the optimal bandwidth can be expressed as follows:

For non-Gaussian kernel functions, the optimal bandwidth is calculated as follows:

| Kernel | ${h_{{\rm{opt}}}}$ |

|---|---|

| Uniform | $\hat \sigma \times \root 5 \of {{{12\sqrt \pi } \over n}} = {{1.8431\hat \sigma } \over {\root 5 \of n }}$ |

| Triangular | $\hat \sigma \times \root 5 \of {{{64\sqrt \pi } \over n}} = {{2.576\hat \sigma } \over {\root 5 \of n }}$ |

| Quartic (Biweight) | $\hat \sigma \times \root 5 \of {{{280\sqrt \pi } \over {3n}}} = {{2.778\hat \sigma } \over {\root 5 \of n }}$ |

| Triweight | $\hat \sigma \times \root 5 \of {{{3150\sqrt \pi } \over {143n}}} = {{2.0812\hat \sigma } \over {\root 5 \of n }}$ |

| Epanechnikov | $\hat \sigma \times \root 5 \of {{{40\sqrt \pi } \over n}} = {{2.3449\hat \sigma } \over {\root 5 \of n }}$ |

| Cosine | $\hat \sigma \times \root 5 \of {{{{\pi ^6}\sqrt \pi } \over {6{{({\pi ^2} - 8)}^2}n}}} = {{2.4097\hat \sigma } \over {\root 5 \of n }}$ |

Although the assumption behind the rule-of-thumb is rather simplistic, we often use this method as a starting point for more sophisticated bandwidth estimation methods (e.g., direct plug-in).

References

- Silverman, B.W. (1986). Density Estimation for Statistics and Data Analysis. Chapman & Hall/CRC London.

- W. Zucchini, Applied smoothing techniques, Part 1 Kernel Density Estimation., 2003.

- Byeong U. Park and J. S. Marron. Comparison of Data-Driven Bandwidth Selectors. Journal of the American Statistical Association Vol. 85, No. 409 (Mar., 1990), pp. 66-72 (7 pages).